Phase semantics

Last revision by Remi Nollet (minor edit: “absorbant” ~> “absorbing”)

Latest revision as of 14:55, 22 January 2020

Introduction

The semantics given by phase spaces is a kind of "formula and provability semantics", and is thus quite different in spirit from the more usual denotational semantics of linear logic, which are rather "formula and proof semantics".

--- probably a whole lot more of blabla to put here... ---

Preliminaries: relation and closure operators

Part of the structure obtained from phase semantics works in a very general framework and relies solely on the notion of relation between two sets.

[edit] Relations and operators on subsets

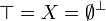

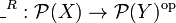

The starting point of phase semantics is the notion of duality. The structure needed to talk about duality is very simple: one just needs a relation R between two sets X and Y. Using standard mathematical practice, we can write either  or

or  to say that

to say that  and

and  are related.

are related.

Definition

If <math>R \subseteq X \times Y</math> is a relation, we write <math>R^\sim \subseteq Y \times X</math> for the converse relation: <math>(b,a) \in R^\sim</math> iff <math>(a,b) \in R</math>.

Such a relation yields three interesting operators sending subsets of X to subsets of Y:

Definition

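Let <math>R \subseteq X \times Y</math> be a relation, define the operators <math>\langle R\rangle</math>, <math>[R]</math> and <math>\_^R</math> taking subsets of <math>X</math> to subsets of <math>Y</math> as follows:

- <math>b \in \langle R\rangle(x)</math> iff <math>\exists a \in x,\ (a,b) \in R</math>

- <math>b \in [R](x)</math> iff <math>\forall a \in X,\ (a,b) \in R \implies a \in x</math>

- <math>b \in x^R</math> iff <math>\forall a \in x,\ (a,b) \in R</math>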

The operator <math>\langle R\rangle</math> is usually called the direct image of the relation, <math>[R]</math> is sometimes called the universal image of the relation.

It is trivial to check that <math>\langle R\rangle</math> and <math>[R]</math> are covariant (increasing for the <math>\subseteq</math> relation) while <math>\_^R</math> is contravariant (decreasing for the <math>\subseteq</math> relation). More interesting:

Lemma (Galois Connections)

- <math>\langle R\rangle</math> is left-adjoint to <math>[R^\sim]</math>: for any <math>x \subseteq X</math> and <math>y \subseteq Y</math>, we have <math>\langle R\rangle(x) \subseteq y</math> iff <math>x \subseteq [R^\sim](y)</math>

- we have <math>y \subseteq x^R</math> iff <math>x \subseteq y^{R^\sim}</math>

This implies directly that <math>\langle R\rangle</math> commutes with arbitrary unions and <math>[R]</math> commutes with arbitrary intersections. (And in fact, any operator commuting with arbitrary unions (resp. intersections) is of the form <math>\langle R\rangle</math> (resp. <math>[R]</math>).)

Remark: the operator <math>\_^R</math> sends unions to intersections because <math>\_^R : \mathcal{P}(X)^{\mathrm{op}} \to \mathcal{P}(Y)</math> is right adjoint to <math>\_^{R^\sim} : \mathcal{P}(Y) \to \mathcal{P}(X)^{\mathrm{op}}</math>...
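The three operators and the two Galois connections can be checked by brute force on a small finite relation. The following is only a sketch: the sets `X`, `Y`, the relation `R` and all function names are hypothetical choices made for illustration, and subsets are represented as Python `frozenset`s.

```python
from itertools import chain, combinations

def powerset(s):
    """All subsets of the finite set s, as frozensets."""
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

def converse(R):
    """The converse relation: (b, a) is in the result iff (a, b) is in R."""
    return {(b, a) for (a, b) in R}

def direct(R, x):
    """Direct image <R>(x): elements related to at least one element of x."""
    return frozenset(b for (a, b) in R if a in x)

def universal(R, X, Y, x):
    """Universal image [R](x): elements of Y whose R-predecessors all lie in x."""
    return frozenset(b for b in Y if all(a in x for a in X if (a, b) in R))

def orth(R, Y, x):
    """x^R: elements of Y related to every element of x."""
    return frozenset(b for b in Y if all((a, b) in R for a in x))

# Hypothetical toy data: R is a relation between X and Y.
X = frozenset({0, 1, 2})
Y = frozenset({"p", "q"})
R = {(0, "p"), (1, "p"), (1, "q")}
R_conv = converse(R)

# <R>(x) is included in y  iff  x is included in [R~](y)
galois_direct = all(
    (direct(R, x) <= y) == (x <= universal(R_conv, Y, X, y))
    for x in powerset(X) for y in powerset(Y))

# y is included in x^R  iff  x is included in y^(R~)
galois_orth = all(
    (y <= orth(R, Y, x)) == (x <= orth(R_conv, X, y))
    for x in powerset(X) for y in powerset(Y))

print(galois_direct, galois_orth)
```

Since the check enumerates all pairs of subsets, it is only practical for very small sets; it illustrates the statements and is not a proof.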

Closure operators

Definition

A closure operator on <math>\mathcal{P}(X)</math> is a monotonic operator <math>P</math> on the subsets of <math>X</math> which satisfies:

- for all <math>x \subseteq X</math>, we have <math>x \subseteq P(x)</math>

- for all <math>x \subseteq X</math>, we have <math>P(P(x)) \subseteq P(x)</math>

Closure operators are quite common in mathematics and computer science. They correspond exactly to the notion of monad on a preorder...

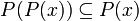

It follows directly from the definition that for any closure operator P, the image P(x) is a fixed point of P. Moreover:

Lemma

P(x) is the smallest fixed point of P containing x.

One other important property is the following:

Lemma

Write  for the collection of fixed points of a closure operator P. We have that

for the collection of fixed points of a closure operator P. We have that  is a complete inf-lattice.

is a complete inf-lattice.

Remark:

A closure operator is in fact determined by its set of fixed points: we have

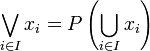

Since any complete inf-lattice is automatically a complete sup-lattice,  is also a complete sup-lattice. However, the sup operation isn't given by plain union:

is also a complete sup-lattice. However, the sup operation isn't given by plain union:

Lemma

If P is a closure operator on  , and if

, and if  is a (possibly infinite) family of subsets of X, we write

is a (possibly infinite) family of subsets of X, we write  .

.

We have  is a complete lattice.

is a complete lattice.

Proof. easy.

A rather direct consequence of the Galois connections of the previous section is:

Lemma

The operators <math>[R^\sim] \circ \langle R\rangle</math> and <math>x \mapsto (x^R)^{R^\sim}</math> are closures.

A last trivial lemma:

Lemma

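We have <math>x^R = ((x^R)^{R^\sim})^R</math>.

As a consequence, a subset <math>x \subseteq X</math> is a fixed point of the closure <math>x \mapsto (x^R)^{R^\sim}</math> iff it is of the form <math>y^{R^\sim}</math> for some <math>y \subseteq Y</math>.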

Remark: everything gets a little simpler when R is a symmetric relation on X.
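To see the closure laws and the behaviour of sups concretely, here is a minimal sketch that computes the biorthogonal closure of a small finite relation. The relation `R`, the sets `X` and `Y`, and the helper names are hypothetical; the relation was chosen so that the union of two fixed points is not itself a fixed point.

```python
from itertools import chain, combinations

def powerset(s):
    """All subsets of the finite set s, as frozensets."""
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

def orth(R, Y, x):
    """x^R: elements of Y related to every element of x."""
    return frozenset(b for b in Y if all((a, b) in R for a in x))

# Hypothetical toy relation between X and Y.
X = frozenset({0, 1, 2})
Y = frozenset({"p", "q"})
R = {(0, "p"), (1, "q")}
R_conv = {(b, a) for (a, b) in R}

def closure(x):
    """The operator sending x to (x^R)^(R~), acting on subsets of X."""
    return orth(R_conv, X, orth(R, Y, x))

subsets = powerset(X)
is_extensive = all(x <= closure(x) for x in subsets)
is_idempotent = all(closure(closure(x)) == closure(x) for x in subsets)
is_monotone = all(closure(x) <= closure(y)
                  for x in subsets for y in subsets if x <= y)

fixed_points = {x for x in subsets if closure(x) == x}

def sup(family):
    """Sup in the lattice of fixed points: closure of the plain union."""
    return closure(frozenset().union(*family))

def meet_above(x):
    """Intersection of all fixed points containing x."""
    result = X
    for y in fixed_points:
        if x <= y:
            result = result & y
    return result

determined_by_fixed_points = all(
    closure(x) == meet_above(x) for x in subsets)

a, b = frozenset({0}), frozenset({1})
print(sorted(a | b), sorted(sup([a, b])))
```

Here `a` and `b` are both fixed points, but their union is not, so the sup has to be computed by closing the union.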

[edit] Phase Semantics

[edit] Phase spaces

Definition (monoid)

A monoid is simply a set X equipped with a binary operation  s.t.:

s.t.:

- the operation is associative

- there is a neutral element

The monoid is commutative when the binary operation is commutative.

Definition (Phase space)

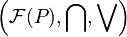

A phase space is given by:

- a commutative monoid

,

,

- together with a subset

.

.

The elements of X are called phases.

We write  for the relation

for the relation  . This relation is symmetric.

. This relation is symmetric.

A fact in a phase space is simply a fixed point for the closure operator  .

.

Thanks to the preliminary work, we have:

Corollary

The set of facts of a phase space is a complete lattice where:

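- <math>\bigwedge_{i \in I} x_i</math> is simply <math>\bigcap_{i \in I} x_i</math>,

- <math>\bigvee_{i \in I} x_i</math> is <math>\left(\bigcup_{i \in I} x_i\right)\orth\orth</math>.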

[edit] Additive connectives

The previous corollary makes the following definition correct:

Definition (additive connectives)

If  is a phase space, we define the following facts and operations on facts:

is a phase space, we define the following facts and operations on facts:

Once again, the next lemma follows from previous observations:

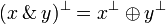

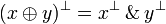

Lemma (additive de Morgan laws)

We have
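A small finite phase space makes facts and the additive connectives tangible. The sketch below assumes a hypothetical example that is not taken from the article: the commutative monoid of integers modulo 6 under multiplication, with pole `{2}`. It enumerates the facts and checks the additive de Morgan laws on them.

```python
from itertools import chain, combinations

N = 6
X = frozenset(range(N))   # phases: integers modulo 6 under multiplication
POLE = frozenset({2})     # hypothetical choice of pole

def mul(a, b):
    """Monoid product; the neutral element is 1."""
    return (a * b) % N

def orth(x):
    """Orthogonal of x: phases whose product with every element of x is in the pole."""
    return frozenset(a for a in X if all(mul(a, b) in POLE for b in x))

def biorth(x):
    return orth(orth(x))

def powerset(s):
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

# Facts are the subsets equal to their biorthogonal.
facts = {x for x in powerset(X) if biorth(x) == x}

TOP = X
ZERO = biorth(frozenset())

def with_(x, y):
    """Additive conjunction: plain intersection."""
    return x & y

def plus(x, y):
    """Additive disjunction: biorthogonal of the union."""
    return biorth(x | y)

de_morgan_holds = (
    orth(ZERO) == TOP and orth(TOP) == ZERO
    and all(orth(with_(x, y)) == plus(orth(x), orth(y))
            and orth(plus(x, y)) == with_(orth(x), orth(y))
            for x in facts for y in facts))

print(sorted(sorted(f) for f in facts))
print(de_morgan_holds)
```

In this example `{2}` and `{4}` are facts whose union is not a fact, so their sum is strictly larger than their union.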

[edit] Multiplicative connectives

In order to define the multiplicative connectives, we actually need to use the monoid structure of our phase space. One interpretation that is reminiscent in phase semantics is that our spaces are collections of tests / programs / proofs / strategies that can interact with each other. The result of the interaction between a and b is simply  .

.

The set  can be thought of as the set of "good" things, and we thus have

can be thought of as the set of "good" things, and we thus have  iff "a interacts correctly with all the elements of x".

iff "a interacts correctly with all the elements of x".

Definition

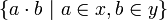

If x and y are two subsets of a phase space, we write  for the set

for the set  .

.

Thus  contains all the possible interactions between one element of x and one element of y.

contains all the possible interactions between one element of x and one element of y.

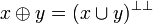

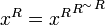

The tensor connective of linear logic is now defined as:

Definition (multiplicative connectives)

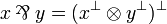

If x and y are facts in a phase space, we define

-

;

;

-

;

;

- the tensor

to be the fact

to be the fact  ;

;

- the par connective is the de Morgan dual of the tensor:

;

;

- the linear arrow is just

.

.

Note that by unfolding the definition of  , we have the following, "intuitive" definition of

, we have the following, "intuitive" definition of  :

:

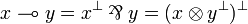

Lemma

If x and y are facts, we have  iff

iff

Proof. easy exercise.
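The multiplicative connectives and the characterisation of the linear arrow can be tested on a finite example. This sketch assumes a hypothetical phase space (integers modulo 6 under multiplication, pole `{2}`); the function names are illustrative only.

```python
from itertools import chain, combinations

N = 6
X = frozenset(range(N))   # phases: integers modulo 6 under multiplication
POLE = frozenset({2})     # hypothetical choice of pole

def mul(a, b):
    return (a * b) % N

def orth(x):
    return frozenset(a for a in X if all(mul(a, b) in POLE for b in x))

def biorth(x):
    return orth(orth(x))

def powerset(s):
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

facts = [x for x in powerset(X) if biorth(x) == x]

def dot(x, y):
    """Pointwise product of two subsets."""
    return frozenset(mul(a, b) for a in x for b in y)

ONE = biorth(frozenset({1}))
BOT = orth(ONE)

def tens(x, y):
    return biorth(dot(x, y))

def parr(x, y):
    return orth(tens(orth(x), orth(y)))

def limp(x, y):
    """Linear arrow, defined as the par of the orthogonal of x with y."""
    return parr(orth(x), y)

def limp_direct(x, y):
    """The 'intuitive' description: phases sending every element of x into y."""
    return frozenset(a for a in X if all(mul(a, b) in y for b in x))

lemma_holds = all(limp(x, y) == limp_direct(x, y)
                  for x in facts for y in facts)

print(sorted(ONE), sorted(BOT), lemma_holds)
```

Note that `BOT` coincides with the pole, as expected from unfolding the definitions.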

Readers familiar with realisability will appreciate...

Remark:

Some people say that this idea of orthogonality was implicitly present in Tait's proof of strong normalisation. More recently, Jean-Louis Krivine and Alexandre Miquel have used the idea explicitly to do realisability...

Properties

All the expected properties hold:

Lemma

- The operations <math>\tens</math>, <math>\parr</math>, <math>\plus</math> and <math>\with</math> are commutative and associative,

- They have respectively <math>\one</math>, <math>\bot</math>, <math>\zero</math> and <math>\top</math> as neutral element,

- <math>\zero</math> is absorbing for <math>\tens</math>,

- <math>\top</math> is absorbing for <math>\parr</math>,

- <math>\tens</math> distributes over <math>\plus</math>,

- <math>\parr</math> distributes over <math>\with</math>.
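These algebraic laws can be verified exhaustively on a finite phase space. The sketch below assumes a hypothetical example (integers modulo 6 under multiplication, pole `{2}`) and checks each item of the lemma over all facts; it illustrates the statement on one model and does not replace the general proof.

```python
from itertools import chain, combinations

N = 6
X = frozenset(range(N))   # phases: integers modulo 6 under multiplication
POLE = frozenset({2})     # hypothetical choice of pole

def mul(a, b):
    return (a * b) % N

def orth(x):
    return frozenset(a for a in X if all(mul(a, b) in POLE for b in x))

def biorth(x):
    return orth(orth(x))

def powerset(s):
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

facts = [x for x in powerset(X) if biorth(x) == x]

ONE, TOP = biorth(frozenset({1})), X
BOT, ZERO = orth(ONE), biorth(frozenset())

def tens(x, y):
    return biorth(frozenset(mul(a, b) for a in x for b in y))

def parr(x, y):
    return orth(tens(orth(x), orth(y)))

def plus(x, y):
    return biorth(x | y)

def with_(x, y):
    return x & y

def commutative(op):
    return all(op(x, y) == op(y, x) for x in facts for y in facts)

def associative(op):
    return all(op(op(x, y), z) == op(x, op(y, z))
               for x in facts for y in facts for z in facts)

def neutral(op, e):
    return all(op(e, x) == x for x in facts)

def absorbing(op, e):
    return all(op(e, x) == e for x in facts)

def distributes(op, over):
    return all(op(x, over(y, z)) == over(op(x, y), op(x, z))
               for x in facts for y in facts for z in facts)

ops = (tens, parr, plus, with_)
checks = {
    "commutative": all(commutative(op) for op in ops),
    "associative": all(associative(op) for op in ops),
    "neutral": all(neutral(op, e) for op, e in
                   zip(ops, (ONE, BOT, ZERO, TOP))),
    "zero absorbing for tensor": absorbing(tens, ZERO),
    "top absorbing for par": absorbing(parr, TOP),
    "tensor over plus": distributes(tens, plus),
    "par over with": distributes(parr, with_),
}
print(checks)
```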

[edit] Exponentials

Definition (Exponentials)

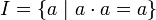

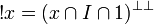

Write I for the set of idempotents of a phase space:  . We put:

. We put:

-

,

,

-

.

.

This definition captures precisely the intuition behind the exponentials:

- we need to have contraction, hence we restrict to indempotents in x,

- and weakening, hence we restrict to

.

.

Since I isn't necessarily a fact, we then take the biorthogonal to get a fact...
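As an illustration of how idempotents and the unit fact interact, the following sketch computes the exponentials in a hypothetical finite phase space (integers modulo 6 under multiplication, pole `{2}`). The exponentials are taken to be the biorthogonal of the intersection of a fact with the idempotents and the unit, which is the usual definition but is stated here as an assumption; the names `of_course` and `why_not` are illustrative.

```python
from itertools import chain, combinations

N = 6
X = frozenset(range(N))   # phases: integers modulo 6 under multiplication
POLE = frozenset({2})     # hypothetical choice of pole

def mul(a, b):
    return (a * b) % N

def orth(x):
    return frozenset(a for a in X if all(mul(a, b) in POLE for b in x))

def biorth(x):
    return orth(orth(x))

def powerset(s):
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

facts = [x for x in powerset(X) if biorth(x) == x]

ONE = biorth(frozenset({1}))
IDEMPOTENTS = frozenset(a for a in X if mul(a, a) == a)

def tens(x, y):
    return biorth(frozenset(mul(a, b) for a in x for b in y))

def of_course(x):
    """!x: biorthogonal of the idempotents of x that lie in the unit fact."""
    return biorth(x & IDEMPOTENTS & ONE)

def why_not(x):
    """?x: orthogonal of the idempotents of the orthogonal of x lying in the unit fact."""
    return orth(orth(x) & IDEMPOTENTS & ONE)

# Semantic counterparts of dereliction, weakening and contraction.
dereliction = all(of_course(x) <= x for x in facts)
weakening = all(of_course(x) <= ONE for x in facts)
contraction = all(of_course(x) <= tens(of_course(x), of_course(x))
                  for x in facts)
duality = all(why_not(x) == orth(of_course(orth(x))) for x in facts)

print(sorted(IDEMPOTENTS), dereliction, weakening, contraction, duality)
```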

[edit] Soundness

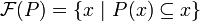

Definition

Let  be a commutative monoid.

be a commutative monoid.

Given a formula A of linear logic and an assignation ρ that associate a fact to any variable, we can inductively define the interpretation  of A in X as one would expect. Interpretation is lifted to sequents as

of A in X as one would expect. Interpretation is lifted to sequents as  .

.

Theorem

Let Γ be a provable sequent in linear logic. Then  .

.

Proof. By induction on  .

.

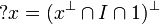
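Soundness can be illustrated on the multiplicative fragment with a tiny interpreter. The sketch below is built on assumptions: a hypothetical finite phase space (integers modulo 6 under multiplication, pole `{2}`), formulas encoded as nested tuples, and negation allowed only on variables. It checks that two provable sequents contain the unit under every assignment of facts, and that the unprovable one-variable sequent does not.

```python
from functools import reduce
from itertools import chain, combinations

N = 6
X = frozenset(range(N))   # phases: integers modulo 6 under multiplication
POLE = frozenset({2})     # hypothetical choice of pole

def mul(a, b):
    return (a * b) % N

def orth(x):
    return frozenset(a for a in X if all(mul(a, b) in POLE for b in x))

def biorth(x):
    return orth(orth(x))

def powerset(s):
    items = list(s)
    return [frozenset(c) for c in chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1))]

facts = [x for x in powerset(X) if biorth(x) == x]

def tens(x, y):
    return biorth(frozenset(mul(a, b) for a in x for b in y))

def parr(x, y):
    return orth(tens(orth(x), orth(y)))

def interp(formula, rho):
    """Interpret a formula given as ("var", v), ("neg", v), ("tens", A, B) or ("parr", A, B)."""
    tag = formula[0]
    if tag == "var":
        return rho[formula[1]]
    if tag == "neg":
        return orth(rho[formula[1]])
    left, right = interp(formula[1], rho), interp(formula[2], rho)
    return tens(left, right) if tag == "tens" else parr(left, right)

def interp_sequent(sequent, rho):
    """Par of the interpretations of the formulas of a non-empty sequent."""
    return reduce(parr, (interp(f, rho) for f in sequent))

def unit_in_all_models(sequent):
    """True when 1 belongs to the interpretation for every assignment of facts."""
    return all(1 in interp_sequent(sequent, {"a": x, "b": y})
               for x in facts for y in facts)

a, na = ("var", "a"), ("neg", "a")
b, nb = ("var", "b"), ("neg", "b")

axiom = [na, a]                                  # provable by the axiom rule
par_tens = [("parr", na, nb), ("tens", a, b)]    # provable with tensor and par
lone_variable = [a]                              # not provable

print(unit_in_all_models(axiom),
      unit_in_all_models(par_tens),
      unit_in_all_models(lone_variable))
```

The last check fails in this model, which by the contrapositive of soundness confirms that the sequent consisting of a single variable is not provable.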

[edit] Completeness

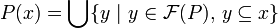

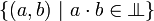

Phase semantics is complete w.r.t. linear logic. In order to prove this, we need to build a particular commutative monoid.

Definition

We define the syntactic monoid as follows:

- Its elements are sequents Γ quotiented by the equivalence relation

generated by the rules:

generated by the rules:

-

if Γ is a permutation of Δ

if Γ is a permutation of Δ

-

-

- Product is concatenation:

- Neutral element is the empty sequent:

.

.

The equivalence relation intuitively means that we do not care about the multiplicity of  -formulae.

-formulae.

Lemma

The syntactic monoid is indeed a commutative monoid.
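One way to make the quotient concrete is to pick a canonical representative for each equivalence class. The sketch below is an assumption-laden illustration: formulas are encoded as plain strings, a formula starting with `?` stands for a why-not formula, and the equivalence is assumed to be generated by permutation and by duplication of why-not formulae, as the remark on multiplicity suggests. The names `normalize`, `product` and `UNIT` are hypothetical.

```python
from collections import Counter

def normalize(sequent):
    """Canonical representative: formulas sorted, each ?-formula kept only once."""
    counts = Counter(sequent)
    result = []
    for formula in sorted(counts):
        copies = 1 if formula.startswith("?") else counts[formula]
        result.extend([formula] * copies)
    return tuple(result)

def product(gamma, delta):
    """Monoid product: concatenation, followed by normalisation."""
    return normalize(tuple(gamma) + tuple(delta))

UNIT = ()  # the empty sequent

# A few sample sequents to test the monoid laws on.
samples = [normalize(s) for s in (
    (), ("A",), ("?A", "B"), ("B", "B", "?C"), ("?A", "?A", "A"))]

is_commutative = all(product(g, d) == product(d, g)
                     for g in samples for d in samples)
is_associative = all(product(product(g, d), e) == product(g, product(d, e))
                     for g in samples for d in samples for e in samples)
has_unit = all(product(UNIT, g) == g for g in samples)

print(product(("B", "?A"), ("?A", "C")))
```

Ordinary formulas keep their multiplicity while repeated why-not formulae collapse, so the product of a sequent with itself is in general different from the sequent.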

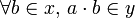

Definition

The syntactic assignation is the assignation that sends any variable α to the fact  .

.

We instantiate the pole as  .

.

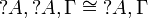

Theorem

If  , then

, then  .

.

Proof. By induction on Γ.

Cut elimination

Actually, the completeness result is stronger, as the proof does not use the cut-rule in the reconstruction of <math>\vdash\Gamma</math>. By refining the pole as the set of cut-free provable formulae, we get:

Theorem

If <math>\Gamma\in\sem{\Gamma}\orth</math>, then <math>\Gamma</math> is cut-free provable.

From soundness, one can retrieve the cut-elimination theorem.

Corollary

Linear logic enjoys the cut-elimination property.